Prof Co-Writes Book He Wished He Owned In 2000

March 20, 2018

ECE Professor Manel Martínez-Ramón is co-author of a newly published textbook called "Digital Signal Processing with Kernel Methods."

This 672-page book was published in February 2018 by John Wiley & Sons and is now available as an e-book, hardcover or o-book. It is advertised by its publishers as "a realistic and comprehensive review of joint approaches to machine learning and signal processing algorithms, with application to communications, multimedia, and biomedical engineering systems."

We recently spoke to Dr. Martínez-Ramón (M-R) about "Digital Signal Processing with Kernel Methods."

ECE: So then, what exactly is digital signal processing?

M-R: Signal processing is a field at the intersection of systems engineering, electrical engineering, and applied mathematics. The field analyzes both analog and digitized signals that represent physical quantities. Signals include sound, electromagnetic radiation, images and videos, electrical signals acquired by a diversity of sensors, or waveforms generated by biological, control, or telecommunication systems, just to name a few.

The word “digital” derives from the Latin word digitus for “finger,” hence indicating everything ultimately related to a representation as countable integer numbers. DSP technologies are today pervasive in many fields of science and engineering, including communications, control, computing, economics, biology, and instrumentation.

ECE: What is a kernel method and why is that important to digital signal processing?

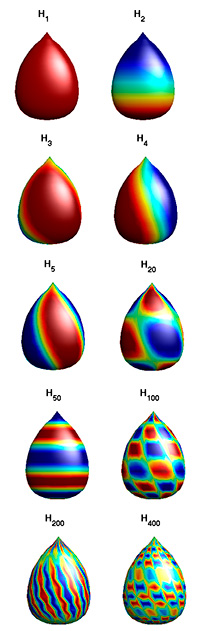

M-R: Roughly speaking, a Mercer kernel in a Hilbert space is a function that computes the inner product between two vectors embedded in that space. This informal definition is formalized through Mercer’s theorem, which gives kernel methods their analytical power. These properties have made kernel methods a preferred tool in machine learning and pattern recognition for years.
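To make the "inner product in an embedded space" idea concrete, here is a minimal sketch that is not taken from the book. It assumes a homogeneous quadratic kernel on 2-D inputs; the function names `poly2_kernel` and `poly2_features` are hypothetical and chosen only for illustration. The kernel is evaluated directly on the inputs and then compared with an ordinary inner product of explicitly embedded vectors.

```python
import math

def dot(u, v):
    """Ordinary Euclidean inner product."""
    return sum(a * b for a, b in zip(u, v))

def poly2_kernel(x, y):
    """Homogeneous quadratic kernel k(x, y) = (x . y)^2 (illustrative choice)."""
    return dot(x, y) ** 2

def poly2_features(x):
    """Explicit embedding of a 2-D vector whose inner product reproduces poly2_kernel."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2.0) * x1 * x2, x2 * x2)

x = (1.0, 2.0)
y = (3.0, -1.0)

# Kernel evaluated directly on the inputs.
k_value = poly2_kernel(x, y)

# The same quantity computed as an inner product in the embedded space.
explicit_value = dot(poly2_features(x), poly2_features(y))

print(k_value, explicit_value)
```

The two printed numbers agree up to floating-point rounding. For kernels such as the Gaussian kernel, the embedded space is infinite-dimensional, so only the kernel evaluation is practical.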

The use of kernels allows classical linear signal processing algorithms to be extended with nonlinear properties while keeping the nice properties of linear algorithms, including regularization.
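One common illustration of this extension is kernel ridge regression, where regularized linear least squares is rewritten in terms of kernel evaluations. The sketch below is an assumption-laden example rather than code from the book: the Gaussian kernel, the parameters `gamma` and `lam`, the toy sine data, and all function names are hypothetical choices made for illustration.

```python
import math

def rbf_kernel(a, b, gamma=1.0):
    """Gaussian (RBF) kernel between two scalars (illustrative choice)."""
    return math.exp(-gamma * (a - b) ** 2)

def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    z = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(M[i][j] * z[j] for j in range(i + 1, n))
        z[i] = (M[i][n] - s) / M[i][i]
    return z

def fit_kernel_ridge(xs, ys, lam=1e-3, gamma=1.0):
    """Solve (K + lam * I) alpha = y; lam is the ridge regularization weight."""
    n = len(xs)
    K = [[rbf_kernel(xs[i], xs[j], gamma) + (lam if i == j else 0.0)
          for j in range(n)] for i in range(n)]
    return solve(K, ys)

def predict(x_new, xs, alpha, gamma=1.0):
    """Prediction is a weighted sum of kernels centered on the training inputs."""
    return sum(a * rbf_kernel(x_new, xi, gamma) for a, xi in zip(alpha, xs))

# Toy data: samples of a nonlinear function that a plain linear fit cannot follow.
xs = [i * 0.5 for i in range(13)]
ys = [math.sin(x) for x in xs]

alpha = fit_kernel_ridge(xs, ys)
prediction = predict(1.25, xs, alpha)
print(prediction, math.sin(1.25))
```

The model stays linear in the coefficients `alpha`, so fitting reduces to one regularized linear solve, while the resulting predictor is nonlinear in the input.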

Kernel methods have been widely adopted in applied communities such as computer vision, time series analysis and econometrics, physical sciences, as well as signal and image processing because they lead to neat, simple, and intuitive algorithms. Kernel methods also make it possible to exploit a vast variety of functions that can be designed and adapted to the application at hand, for example to combine and fuse heterogeneous signal sources (text, images, graphs). Learning the kernel function directly from data is also possible, via generative models and by exploiting the wealth of information in unlabelled data. Kernel design is an open research topic in machine learning and signal processing that keeps producing theoretical results and designs for many different applications.

ECE: How did you lay out the foundations for this book?

M-R: My colleagues and I worked together for years in growing and designing kernel algorithms guided by the robustness requirements from the signals and systems in our application fields. We studied other works around these fields, and some of them were really inspiring and useful in our signal processing problems. We wrote some tutorials and reviews along these lines, aiming to put together the common elements of the kernel methods design under signal processing perspectives.

ECE: How did these tutorials and reviews evolve into a book?

M-R: We were not satisfied with the theoretical tutorials because the richness of the landscape was not reflected in them. This is why we decided to write a book that integrated the theoretical fundamentals, put together representative application examples and, where possible, included code snippets and links to relevant, useful toolboxes and packages.

ECE: What are your feelings about this book now that it is published?

M-R: This is, in some sense, the book we would have liked in the 2000s for ourselves. This book is not intended to be a set of tutorials, nor a set of application papers, and not just a bunch of toolboxes. Rather, the book is intended to be a learning tour for those who like and need to write their algorithms, who need these algorithms for their signal processing applications in real data, and who can be inspired by simple yet illustrative code tutorials.

ECE: Tell us a little bit about your co-authors (José Luis Rojo-Álvarez, Jordi Muñoz-Marí, Gustau Camps-Valls) and how you happened to become involved with them.

M-R: As usual in our community, I met these colleagues after getting engaged in random technical discussions that led to many collaborations over the past 15 years. In particular, I met Dr. Rojo over a printer. I saw a paper being printed with some equations that were familiar to me, and then I exclaimed: “But…this is totally wrong!” A guy behind me challenged me: “What do you mean, wrong?” Eventually, we rewrote and published the paper and experiments and became friends forever. Another young guy with a growing reputation (Dr. Camps-Valls) paid a visit to my university, and we started discussing machine learning. He had another colleague at his university (Dr. Muñoz-Marí). The four of us produced papers, drank many beers and had a lot of fun for years. One of these nights, Gustau said: “Do you guys dare to write a book out of all this stuff?” And we answered:

“Yeah, hold my beer!”

They are among the most prominent researchers in their areas in the Spanish community and they have built an impressive reputation among their peers at an international level, so I am proud to be a co-author with them. In particular, Dr. Rojo is well known for applications of machine learning to cardiology problems, and Drs. Muñoz-Marí and Camps-Valls are very well known for their joint work on the application of machine learning to remote sensing. Together they have hundreds of journal papers and (the equivalent of) several million dollars in research grants.

ECE: How will this book appeal to those involved with machine learning and pattern recognition?

M-R: Machine learning is in constant cross-fertilization with signal processing, and over the last decade the influence has run in both directions. New machine learning developments have relied on achievements from the signal processing community. Advances in signal processing and information processing have given rise to new machine learning frameworks such as sparsity-aware learning, information-theoretic learning, and adaptive filtering.

Machine learning methods in general, and kernel methods in particular, provide an excellent framework to deal with this whole jungle of algorithms and applications. Kernel methods are attractive not only for many of the traditional DSP applications such as pattern recognition, speech, audio, and video processing. Nowadays, as is treated in this book, they are also among the primary candidates for emerging applications such as brain-computer interfaces, satellite image processing, market modeling, antenna and communication network design, multimodal data fusion and processing, behavior and emotion recognition from speech and videos, control, forecasting, spectrum analysis, and learning in complex environments such as social networks.